The past several years have seen the rise of crowdsourcing as an exciting new tool for getting things done. For many, it was a way to get tedious tasks done quickly, such as transcribing audio. For many others, it was a way to get data: labeled image data, transcription correction data, and so on. But there is also a layer of meta-inquiry: what constitutes crowdsourcing? Who is in the crowd, and why? What can they accomplish, and how might the software that supports crowdsourcing be designed to help them accomplish more?

Both of the last two conferences I attended, CSCW2012 and UIST2011, had a “crowdsourcing session” spanning a range of crowdsourcing-related research. Yet only a short while before that, the far bigger CHI conference contained just one or two “crowdsourcing papers.” So what happened in the last few years?

At some point in the last decade, crowdsourcing emerged both as a method for getting lots of tedious work done cheaply and as a field of inquiry that resonated with human-computer interaction researchers. Arguably, this point coincided with the unveiling of the Amazon Mechanical Turk platform, which allowed employers, or “requesters,” to list small, low-paid tasks, or “human intelligence tasks” (HITs), for anonymous online contractors, or “workers,” to complete. In Amazon’s words, this enabled “artificial artificial intelligence”: the capacity to cheaply get answers to questions that cannot be automated.

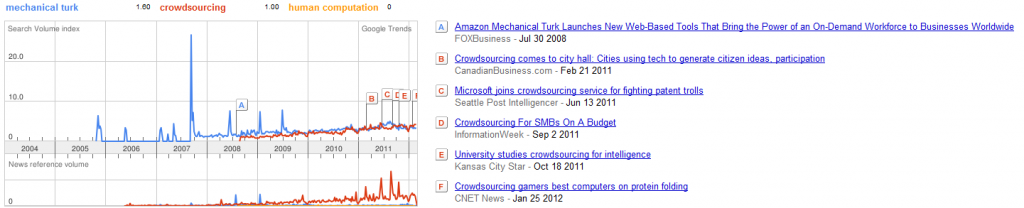

And so this crowdsourcing thing took the academic literature by storm, as evidenced by the growth in yearly Google Scholar results for the related terms “crowdsourcing,” “mechanical turk,” and “human computation,” which all seem to have grown rapidly at roughly the same time.

Barring what could be argued to be noise in the extremely coarse metric of Google Scholar’s yearly result counts, this point in time pretty much coincides with the 2008 unveiling of Mechanical Turk.

There is a distinction between HCI research that (1) uses crowdsourcing; (2) investigates what crowdsourcing as a platform is capable of; and (3) investigates what crowdsourcing is, or ought to be. Kittur, Chi, and Suh authored one of the first papers of the second variety, explaining how crowdsourcing, via Mechanical Turk in particular, could be used as a method for running user studies distinct from the typical approaches in HCI literature (“Crowdsourcing User Studies with Mechanical Turk,” CHI 2008). Later, at CHI2010, a paper of the third variety, characterizing the demographics of the Mechanical Turk workforce, was presented at an alt.chi session by Ross et al. from UC Irvine (“Who are the Crowdworkers? Shifting Demographics in Mechanical Turk,” alt.chi 2010). Since then, the third kind of research has begun to re-examine aspects of crowdsourcing that had been taken as nearly axiomatic because of its initial synonymy with Mechanical Turk.

Adversarial workers and the “arms race.” The kind of task that today is said to use crowdsourcing was already happening well before Mechanical Turk took the world by storm. Luis von Ahn had launched the ESP game and reCAPTCHA several years before. Still, it wasn’t until 2008 that Science published the paper on reCAPTCHA (“reCAPTCHA: Human-Based Character Recognition via Web Security Measures,” Science online 2008). Recently, the terms “human computation” and “crowdsourcing” have been used interchangeably often enough to warrant a 2011 CHI survey by Quinn and Bederson of what those words mean* (“Human computation: a survey and taxonomy of a growing field,” CHI2011). The historical roots of crowdsourcing in Amazon Mechanical Turk seem to have produced a view of the workers as particularly adversarial strangers who can be used to generate data for any and all tasks. This has led to a body of research sometimes referred to as the “crowdsourcing arms race”: the tension between the worker’s [presumed] desire to do as little work as quickly as possible, and the requester’s [presumed] desire to pay as little as possible for as much work as possible. For example, quality control has moved from asking workers to fill out surveys to, more recently, using implicit behavioral measures to identify intentionally shoddy work, or “cheating” (J. Rzeszotarski and A. Kittur, “Instrumenting the Crowd: Using Implicit Behavioral Measures to Predict Task Performance,” UIST2011).

Beyond monetary incentives. Another artifact of the historical connection to Amazon Mechanical Turk has been the conflation of crowdsourcing and human computation with paid work markets and monetary incentives. But there are other models, such as Luis von Ahn’s early games, where human computation is fuelled by fun. Or Jeff Bigham’s VizWiz, where motivation can border on altruism (helping blind people interpret certain objects quickly) despite the monetary incentives offered via Mechanical Turk. Salman Ahmad and colleagues’ work on the Jabberwocky crowd programming environment is a particularly deliberate departure from the monetary incentive model, recasting itself as “structured social computing” despite sharing computational elements with crowdsourcing (“The Jabberwocky Programming Environment for Structured Social Computing,” UIST2011). When I saw this presentation at UIST2011, it was followed by a question: wouldn’t you reach critical mass more easily if you paid workers? The response was telling of where the view of crowdsourcing as a field of inquiry is heading: yes, but the second you incentivize a system monetarily, it becomes impossible to move to other incentive structures, and those are what we want to investigate with structured social computing. (Disclaimer: this is a many-months-old paraphrase!)

Reconsidering the notion of collaboration in crowdsourcing. Along with the assumption of adversarial behavior came the assumption of individual, asynchronous work. To accomplish larger, higher-level tasks, research has begun to consider alternative models of collaboration within crowdsourcing platforms. One example is Jeff Bigham’s work on getting masses of strangers to negotiate over and operate an interface simultaneously (introduced by Lasecki et al., “Real-time Crowd Control of Existing Interfaces,” UIST2011). In my mind, this contrasts with work on platforms that explore novel ways of organizing small work contributions into complex workflows to complete higher-level tasks, such as three CSCW2012 publications (Turkomatic by Kulkarni et al., CrowdWeaver by Kittur et al., and Shepherd by Dow et al.) as well as a UIST2011 publication by Kittur et al. on CrowdForge.

* For example, in a possibly overly broad view, Wikipedia is something like a very unstructured form of crowdsourcing, because many people do small tasks that contribute to the construction of an accurate and complex encyclopedia. However, according to Quinn and Bederson’s taxonomical distinctions, this is more aptly termed social computing.

Written by lab member, student, and deliciousness enthusiast Katie Kuksenok. Read more of her posts here on the SCCL blog, or on her own research blog, interactive everything.