Download

$N source code: JavaScript, C#

Pseudocode: $N, $N-Protractor

Multistroke gesture logs: XML

Papers: $N, $N-Protractor

This software is distributed under the New BSD License agreement.

About

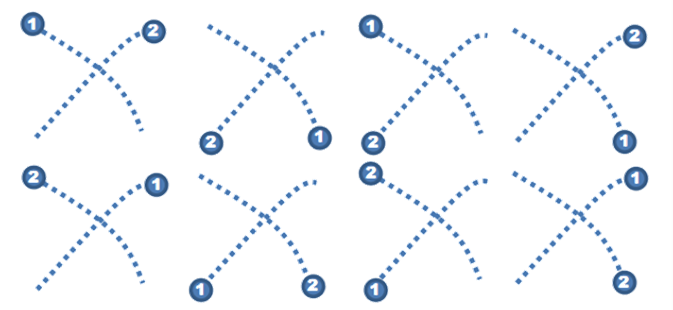

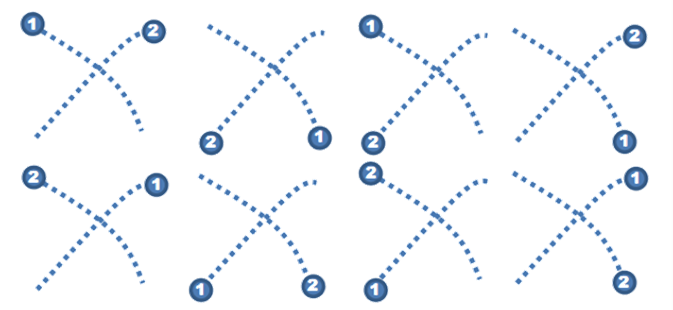

The $N Multistroke Recognizer is a 2-D multistroke recognizer designed for rapid prototyping of gesture-based user interfaces. $N is built upon the $1 Unistroke Recognizer. $N automatically generalizes examples of multistrokes to encompass all possible stroke orders and directions. This means you can make and define multistrokes using any stroke order and direction you wish, provided you begin at either endpoint of each component stroke, and $N will generalize so as to recognize other ways to articulate that same multistroke. A version of $N that uses Protractor, optional here, improves $N's speed.
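
To make the generalization concrete, here is a minimal sketch, assuming each stroke is an array of {x, y} points. It enumerates every stroke order with Heap's algorithm and, for each order, every combination of stroke directions using the bits of a counter, then concatenates the strokes into one unistroke per combination. The helper names `permuteOrders` and `generateUnistrokes` are hypothetical and not taken from the distributed $N source.

```javascript
// Hypothetical sketch (names are not from the $N source): expand one
// multistroke into every unistroke permutation covering all stroke orders
// and directions. A multistroke is an array of strokes; a stroke is an
// array of {x, y} points.

// Heap's algorithm (iterative): every ordering of the indices 0..n-1.
function permuteOrders(n) {
  const order = Array.from({ length: n }, (_, i) => i);
  const counters = new Array(n).fill(0);
  const orders = [order.slice()];
  let i = 1;
  while (i < n) {
    if (counters[i] < i) {
      // Even i swaps with index 0; odd i swaps with index counters[i].
      const j = i % 2 === 0 ? 0 : counters[i];
      [order[j], order[i]] = [order[i], order[j]];
      orders.push(order.slice());
      counters[i] += 1;
      i = 1;
    } else {
      counters[i] = 0;
      i += 1;
    }
  }
  return orders;
}

// For each stroke order, bit k of `mask` decides whether the stroke placed
// at position k is reversed; the strokes are then joined end to end.
function generateUnistrokes(strokes) {
  const unistrokes = [];
  for (const order of permuteOrders(strokes.length)) {
    for (let mask = 0; mask < 2 ** strokes.length; mask++) {
      const unistroke = [];
      order.forEach((strokeIndex, position) => {
        const stroke = strokes[strokeIndex];
        const reversed = ((mask >> position) & 1) === 1;
        // slice() copies before reverse() so the input is not mutated.
        unistroke.push(...(reversed ? stroke.slice().reverse() : stroke));
      });
      unistrokes.push(unistroke);
    }
  }
  return unistrokes;
}

// Usage: a two-stroke "+" (horizontal bar, then vertical bar).
const plus = [
  [{ x: 0, y: 5 }, { x: 10, y: 5 }],
  [{ x: 5, y: 0 }, { x: 5, y: 10 }],
];
const templates = generateUnistrokes(plus);
console.log(templates.length); // 8, i.e. 2! orders * 2^2 directions
```

In $N, each generated unistroke would then be handled much like a $1 template; that matching step is not shown here.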

The $P Point-Cloud Recognizer performs unistroke and multistroke recognition without the combinatoric overhead of $N, as it ignores stroke number, order, and direction. The $Q Super-Quick Recognizer extends the $P recognizer for use on low-powered mobiles and wearables, and is a whopping 142× faster than $P and slightly more accurate.
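
The point-cloud idea can be illustrated with a minimal sketch of greedy cloud matching, assuming both gestures have already been resampled to the same number of points, scaled, and translated (those steps are omitted). Because each point of one cloud is paired with the nearest still-unmatched point of the other regardless of sequence, the resulting distance does not depend on stroke number, order, or direction. The decreasing weights and the roughly √n starting points are modeled on the $P paper's description; the function names are hypothetical and this is not the distributed $P code.

```javascript
// Sketch of $P-style greedy point-cloud matching (not the reference code).
// Assumes both clouds are arrays of {x, y} with the same length n >= 1 and
// have already been resampled, scaled, and translated to a common origin.

function dist(a, b) {
  return Math.hypot(a.x - b.x, a.y - b.y);
}

// Walk `points` from index `start`, greedily pairing each point with the
// nearest still-unmatched point of `template`; earlier pairs weigh more.
function cloudDistance(points, template, start) {
  const n = points.length;
  const matched = new Array(n).fill(false);
  let sum = 0;
  let i = start;
  do {
    let best = Infinity;
    let index = -1;
    for (let j = 0; j < n; j++) {
      if (!matched[j]) {
        const d = dist(points[i], template[j]);
        if (d < best) {
          best = d;
          index = j;
        }
      }
    }
    matched[index] = true;
    const weight = 1 - ((i - start + n) % n) / n;
    sum += weight * best;
    i = (i + 1) % n;
  } while (i !== start);
  return sum;
}

// Try several starting points, matching in both directions, and keep the
// smallest weighted distance found.
function greedyCloudMatch(points, template, epsilon = 0.5) {
  const n = points.length;
  const step = Math.max(1, Math.floor(Math.pow(n, 1 - epsilon)));
  let min = Infinity;
  for (let i = 0; i < n; i += step) {
    min = Math.min(
      min,
      cloudDistance(points, template, i),
      cloudDistance(template, points, i)
    );
  }
  return min;
}

// Usage: the same four points listed in a different order match exactly.
const cloud = [{ x: 0, y: 0 }, { x: 1, y: 0 }, { x: 0, y: 1 }, { x: 1, y: 1 }];
const shuffled = [cloud[3], cloud[1], cloud[0], cloud[2]];
console.log(greedyCloudMatch(cloud, shuffled)); // 0
```

A recognizer built on this would compare the candidate against every template and return the one with the smallest distance.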

You might wish to read about the limitations of $N, which $P and $Q largely avoid.
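
One of those limitations, the combinatoric overhead mentioned above, can be quantified with a short worked count. Assuming every stroke order and every choice of stroke direction is generated for a multistroke of S strokes, the number of unistroke permutations is:

```latex
N_{\text{unistrokes}}(S) = \underbrace{S!}_{\text{stroke orders}} \cdot \underbrace{2^{S}}_{\text{directions}}
\qquad
N(1) = 2,\; N(2) = 8,\; N(3) = 48,\; N(4) = 384,\; N(5) = 3840
```

The count grows factorially with the number of strokes, whereas a point-cloud matcher stores each template once.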

The $-family recognizers have been built into numerous projects and even industry prototypes, and have had many follow-ons by others. Read about the $-family's impact.

Demo

In the demo below, only one multistroke template is loaded for each of the 16 gesture types. You can add additional multistrokes as you wish, and even define your own custom multistrokes.


Our Gesture Software Projects

- $Q: Super-quick multistroke recognizer - optimized for low-power mobiles and wearables

- $P+: Point-cloud multistroke recognizer - optimized for people with low vision

- $P: Point-cloud multistroke recognizer - for recognizing multistroke gestures as point-clouds

- $N: Multistroke recognizer - for recognizing simple multistroke gestures

- $1: Unistroke recognizer - for recognizing unistroke gestures

- AGATe: AGreement Analysis Toolkit - for calculating agreement in gesture-elicitation studies

- GHoST: Gesture HeatmapS Toolkit - for visualizing variation in gesture articulation

- GREAT: Gesture RElative Accuracy Toolkit - for measuring variation in gesture articulation

- GECKo: GEsture Clustering toolKit - for clustering gestures and calculating agreement

Our Gesture Publications

- Vatavu, R.-D. and Wobbrock, J.O. (2022). Clarifying agreement calculations and analysis for end-user elicitation studies. ACM Transactions on Computer-Human Interaction 29 (1). Article No. 5.

- Vatavu, R.-D., Anthony, L. and Wobbrock, J.O. (2018). $Q: A super-quick, articulation-invariant stroke-gesture recognizer for low-resource devices. Proceedings of the ACM Conference on Human-Computer Interaction with Mobile Devices and Services (MobileHCI '18). Barcelona, Spain (September 3-6, 2018). New York: ACM Press. Article No. 23.

- Vatavu, R.-D. (2017). Improving gesture recognition accuracy on touch screens for users with low vision. Proceedings of the ACM Conference on Human Factors in Computing Systems (CHI '17). Denver, Colorado (May 6-11, 2017). New York: ACM Press, pp. 4667-4679.

- Vatavu, R.-D. and Wobbrock, J.O. (2016). Between-subjects elicitation studies: Formalization and tool support. Proceedings of the ACM Conference on Human Factors in Computing Systems (CHI '16). San Jose, California (May 7-12, 2016). New York: ACM Press, pp. 3390-3402.

- Vatavu, R.-D. and Wobbrock, J.O. (2015). Formalizing agreement analysis for elicitation studies: New measures, significance test, and toolkit. Proceedings of the ACM Conference on Human Factors in Computing Systems (CHI '15). Seoul, Korea (April 18-23, 2015). New York: ACM Press, pp. 1325-1334.

- Vatavu, R.-D., Anthony, L. and Wobbrock, J.O. (2014). Gesture heatmaps: Understanding gesture performance with colorful visualizations. Proceedings of the ACM International Conference on Multimodal Interfaces (ICMI '14). Istanbul, Turkey (November 12-16, 2014). New York: ACM Press, pp. 172-179.

- Vatavu, R.-D., Anthony, L. and Wobbrock, J.O. (2013). Relative accuracy measures for stroke gestures. Proceedings of the ACM International Conference on Multimodal Interfaces (ICMI '13). Sydney, Australia (December 9-13, 2013). New York: ACM Press, pp. 279-286.

- Anthony, L., Vatavu, R.-D. and Wobbrock, J.O. (2013). Understanding the consistency of users' pen and finger stroke gesture articulation. Proceedings of Graphics Interface (GI '13). Regina, Saskatchewan (May 29-31, 2013). Toronto, Ontario: Canadian Information Processing Society, pp. 87-94.

- Vatavu, R.-D., Anthony, L. and Wobbrock, J.O. (2012). Gestures as point clouds: A $P recognizer for user interface prototypes. Proceedings of the ACM International Conference on Multimodal Interfaces (ICMI '12). Santa Monica, California (October 22-26, 2012). New York: ACM Press, pp. 273-280.

- Anthony, L. and Wobbrock, J.O. (2012). $N-Protractor: A fast and accurate multistroke recognizer. Proceedings of Graphics Interface (GI '12). Toronto, Ontario (May 28-30, 2012). Toronto, Ontario: Canadian Information Processing Society, pp. 117-120.

- Anthony, L. and Wobbrock, J.O. (2010). A lightweight multistroke recognizer for user interface prototypes. Proceedings of Graphics Interface (GI '10). Ottawa, Ontario (May 31-June 2, 2010). Toronto, Ontario: Canadian Information Processing Society, pp. 245-252.

- Wobbrock, J.O., Wilson, A.D. and Li, Y. (2007). Gestures without libraries, toolkits or training: A $1 recognizer for user interface prototypes. Proceedings of the ACM Symposium on User Interface Software and Technology (UIST '07). Newport, Rhode Island (October 7-10, 2007). New York: ACM Press, pp. 159-168.

Copyright © 2010-2022 Jacob O. Wobbrock. All rights reserved.

Last updated January 8, 2022.