Many UbiComp applications rely on sensing and inference approaches with varying degrees of accuracy, and often report that accuracy as a single percentage, such as 85%. But how do we know whether this performance is good enough? Is this level of uncertainty actually tolerable to users of the intended application? Does the same classifier require a different accuracy level when it is used in a different application area (e.g., energy monitoring vs. health monitoring)? Do people weight precision and recall equally?
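A single accuracy figure can hide very different behaviour. The minimal Python sketch below uses two hypothetical confusion matrices (made-up counts, not data from the paper) that are both 85% accurate on the same 100 labelled instances, then computes precision, recall, and F-beta for each. F-beta is a standard weighted harmonic mean of precision and recall, used here only to illustrate unequal weighting; it is not necessarily the measure the survey tool uses.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionMatrix:
    """Counts from a binary classifier evaluated on labelled data."""

    tp: int  # true positives: the event happened and was detected
    fp: int  # false positives: detected, but did not happen
    fn: int  # false negatives: happened, but was missed
    tn: int  # true negatives: did not happen and was not detected

    @property
    def accuracy(self) -> float:
        return (self.tp + self.tn) / (self.tp + self.fp + self.fn + self.tn)

    @property
    def precision(self) -> float:
        # Of everything the system reported, the fraction that was real.
        reported = self.tp + self.fp
        return self.tp / reported if reported else 0.0

    @property
    def recall(self) -> float:
        # Of everything that really happened, the fraction the system caught.
        actual = self.tp + self.fn
        return self.tp / actual if actual else 0.0

    def f_beta(self, beta: float = 1.0) -> float:
        # Weighted harmonic mean of precision and recall:
        # beta > 1 favours recall, beta < 1 favours precision.
        p, r = self.precision, self.recall
        b2 = beta * beta
        denom = b2 * p + r
        return (1 + b2) * p * r / denom if denom else 0.0


# Two hypothetical classifiers, both 85% accurate on the same 100 instances
# (50 real events, 50 non-events).
classifier_a = ConfusionMatrix(tp=40, fp=5, fn=10, tn=45)
classifier_b = ConfusionMatrix(tp=48, fp=13, fn=2, tn=37)

for name, cm in [("A", classifier_a), ("B", classifier_b)]:
    print(
        f"{name}: accuracy={cm.accuracy:.2f} precision={cm.precision:.2f} "
        f"recall={cm.recall:.2f} F0.5={cm.f_beta(0.5):.2f} F2={cm.f_beta(2):.2f}"
    )
```

Both classifiers report the same accuracy, but A is more precise and B catches more real events. Whether either is "good enough" depends on whether false alarms or missed events cost users more in a given application, which is exactly the judgement a single percentage cannot capture.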

We developed and validated a survey instrument that can systematically answer such questions using scenarios and a measure of acceptability of accuracy based on the Technology Acceptance Model (TAM). This survey tool can help developers of machine learning systems identify a target classification accuracy before they expend effort on improving performance.
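Once survey responses are in hand, a developer still has to turn them into a concrete target. As a rough sketch only, assuming you have a mean acceptability rating for each accuracy level tested and have chosen your own "acceptable" threshold, the hypothetical helper `target_accuracy` below finds the lowest accuracy at which the rating reaches that threshold, interpolating linearly between tested levels. The 1-7 rating scale, the threshold, and the example ratings are all illustrative assumptions; this is not the analysis reported in the paper.

```python
from typing import Optional, Sequence, Tuple


def target_accuracy(
    ratings: Sequence[Tuple[float, float]],
    threshold: float,
) -> Optional[float]:
    """Lowest accuracy at which the mean acceptability rating reaches `threshold`.

    `ratings` holds (accuracy level, mean acceptability rating) pairs, one per
    level tested in a survey. Between tested levels the rating is linearly
    interpolated. Returns None if no tested level is rated acceptably.
    """
    points = sorted(ratings)
    if not points:
        return None
    if points[0][1] >= threshold:
        return points[0][0]
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        # First point that reaches the threshold; here y1 > y0,
        # so the division below is safe.
        if y0 < threshold <= y1:
            return x0 + (threshold - y0) * (x1 - x0) / (y1 - y0)
    return None


# Hypothetical mean ratings on a 1-7 scale (illustrative only, not survey data).
example = [(0.70, 3.1), (0.80, 4.2), (0.90, 5.6), (0.95, 6.0)]
print(target_accuracy(example, threshold=5.0))  # roughly 0.857
```

Linear interpolation keeps the sketch simple; fitting a model to individual responses, as a real analysis would, gives a smoother curve and an estimate of uncertainty around the target.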

The paper that describes the survey approach and its validation was published at CHI 2015. The code for generating the surveys is available on GitHub.

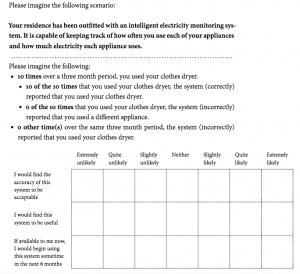

Excerpt from an example survey. The user is presented with a scenario and then with an automatically generated accuracy level, and must rate how acceptable the system would be. The result is an ROC curve that shows how users value precision vs. recall.
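An automatically generated accuracy level has to be expressed in terms respondents can judge. A minimal sketch, assuming the scenario is phrased as whole-number counts of real events, detections, misses, and false alarms: the function converts a target precision and recall into such counts. The names `counts_for`, `ScenarioCounts`, and `describe`, and the wording of the description, are hypothetical; this is not the generator in the authors' GitHub repository.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ScenarioCounts:
    actual_events: int  # times the event really happened
    detected: int       # true positives
    missed: int         # false negatives
    false_alarms: int   # false positives


def counts_for(precision: float, recall: float, actual_events: int) -> ScenarioCounts:
    """Turn a (precision, recall) pair into whole-number counts for a scenario."""
    if not (0 < precision <= 1 and 0 <= recall <= 1):
        raise ValueError("precision must be in (0, 1] and recall in [0, 1]")
    # recall = tp / (tp + fn), with tp + fn fixed at actual_events
    detected = round(recall * actual_events)
    missed = actual_events - detected
    # precision = tp / (tp + fp)  =>  fp = tp * (1 - precision) / precision
    false_alarms = round(detected * (1 - precision) / precision)
    return ScenarioCounts(actual_events, detected, missed, false_alarms)


def describe(c: ScenarioCounts) -> str:
    return (
        f"Out of {c.actual_events} times the event actually happened, the system "
        f"detected it {c.detected} times and missed it {c.missed} times. It also "
        f"reported the event {c.false_alarms} times when it had not happened."
    )


print(describe(counts_for(precision=0.8, recall=0.9, actual_events=10)))
```

Because counts are rounded to whole numbers, the precision and recall a respondent sees are only approximately the requested values (here 9 detections and 2 false alarms give a precision of about 0.82); using more actual events reduces that error at the cost of a harder-to-read scenario.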

People

Matthew Kay

Shwetak Patel

Julie Kientz

Publications

- Kay, M., Patel, S. N., & Kientz, J. A. (2015, April). How Good is 85%?: A Survey Tool to Connect Classifier Evaluation to Acceptability of Accuracy. In Proceedings of the 33rd Annual ACM Conference on Human Factors in Computing Systems (pp. 347-356). ACM.