by Peter D. Huck

In STEP-WISE, my co-instructors and I sought to leverage our experience with computer programming to show fourth-year biology students what types of analysis are possible with open-source computational resources such as Python. We sought to teach students how to think about and approach problems relevant to our research. Little did we know that we were adhering to an approach that the education literature calls teaching with “authentic problems”.

A perennial concern in higher education is how to ensure that recent college graduates can solve the real-world problems they encounter, given that completing a program of rigorous course work does not guarantee this ability. Price and coworkers (2021) address this concern. They hypothesize that the ability to solve problems is assessed by challenging exercises that have well-defined answers reached by straightforward analysis but that do not require the use of judgement to make decisions based on limited or incomplete information. Therefore, to improve students’ ability to solve problems, instructors should offer problems that are more unstructured, that lack a clear solution path, or that are not certain to have any solution at all, so that they come closer to real-world situations. These are the problems that, according to Price et al., are authentic.

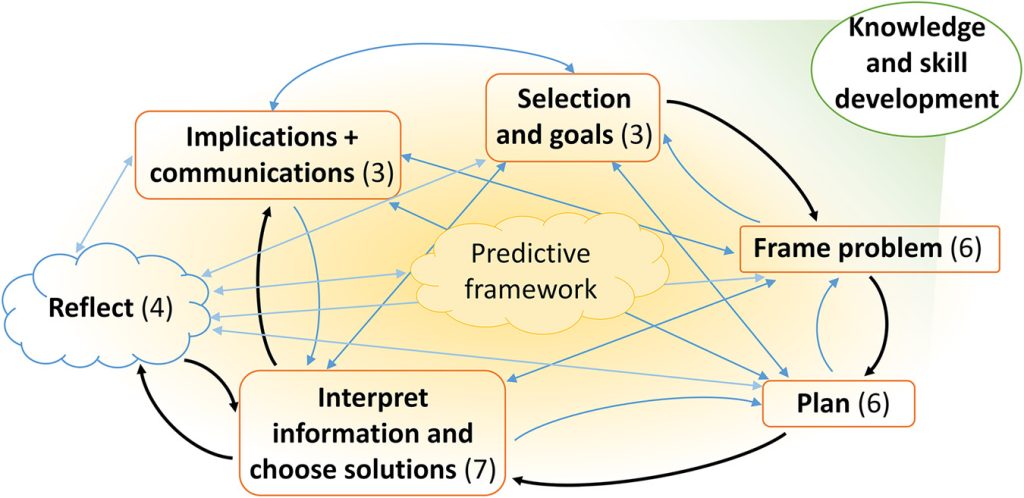

Price et al. ask “What are the decisions experts make in solving authentic problems, and to what extent is this set of decisions to be made consistent both within and across [STEM] disciplines?” They interviewed 52 successful scientists and engineers (“experts”) spanning different disciplines, including biology and medicine, asking them to describe how they solve typical but important problems in their work. When they analyzed the interviews, they identified specific decisions the experts made and then classified them into broad categories (problem selection, framing the problem, process for solving, interpret and choose solutions, reflection, communication of results), with an array of sub-categories in each. Although certain domain-specific decisions were observed, between 75% and 90% of the classified decisions were common to engineers, physical scientists, medical professionals, and biologists. What appeared to matter was not the content of the problem but the decisions that professionals have practice making, and these decisions are largely universal across disciplines.

This study has clear implications for instructors seeking to implement active learning methods in their classrooms. Structuring activities that focus on certain aspects of the problem-solving process and that target expert decision making could be envisaged as a way of training students to make difficult decisions in the face of uncertainty. On the other hand, strictly adhering to the expert approach and forcing students into a decision-making paradigm may impede the natural process of trial and error, success and failure, by which an expert decision-making process is cultivated. Our STEP experience itself could be seen as a problem-solving exercise, informed by experts in the science of education, which suggests that the results of Price et al. may also be applicable to educator training.

Reference:

Price, A. M., Kim, C. J., Burkholder, E. W., Fritz, A. V. and Wieman, C. E. A Detailed Characterization of the Expert Problem-Solving Process in Science and Engineering: Guidance for Teaching and Assessment. CBE – Life Sciences Education. 20 (3). 2021. https://www.lifescied.org/doi/full/10.1187/cbe.20-12-0276

Another reading on decision-making processes in education, specifically the notion of intuition:

Kryjevskaia, M., Heron, P. R. L. and Heckler, A. F. Intuitive or rational? Students and experts need to be both. Physics Today. August 2021. https://physicstoday.scitation.org/doi/abs/10.1063/PT.3.4813